Intro: This post is my brain-dump on implementing Azure Event Hubs. The repo for this solution can be found here. Specification: I prefer to write specifications before implementing interpreters. This exercise encourages me to interrogate any premature design decisions I might have made. The following specification was written for publishing an …

F# with Azure Blob Storage

Repo: The repo for this solution can be found here. Specification Library: I often write compilable specifications for business apps. The process encourages a moderate amount of upfront design before coding. Code: The following 'Operations' module serves as an outline for blob storage operations: namespace BeachMobile.ImageService open System.Threading.Tasks open Language module Operations = module List = type ByContainer …

Data Retrieval with Azure CosmosDB and Redis

CosmosDB and Redis are two data stores with different responsibilities. CosmosDB is a NoSQL data store that can serve as an alternative to SQL Server when used through its SQL client API. Redis is an in-memory data store, commonly used as a cache to reduce latency, cost, and compute between client and server. The repo for this brain …


Comparing Software Development to the Game of Chess?

I asked ChatGPT the following: What can software development stakeholders learn from the game of chess? ChatGPT: Strategic Thinking: Chess is a game of strategy, and software development requires a similar mindset. Stakeholders can learn to think strategically by considering long-term goals, anticipating potential obstacles, and planning ahead to achieve desired outcomes. Decision-Making: Chess …


How I would Approach a Xamarin to .NET MAUI Migration

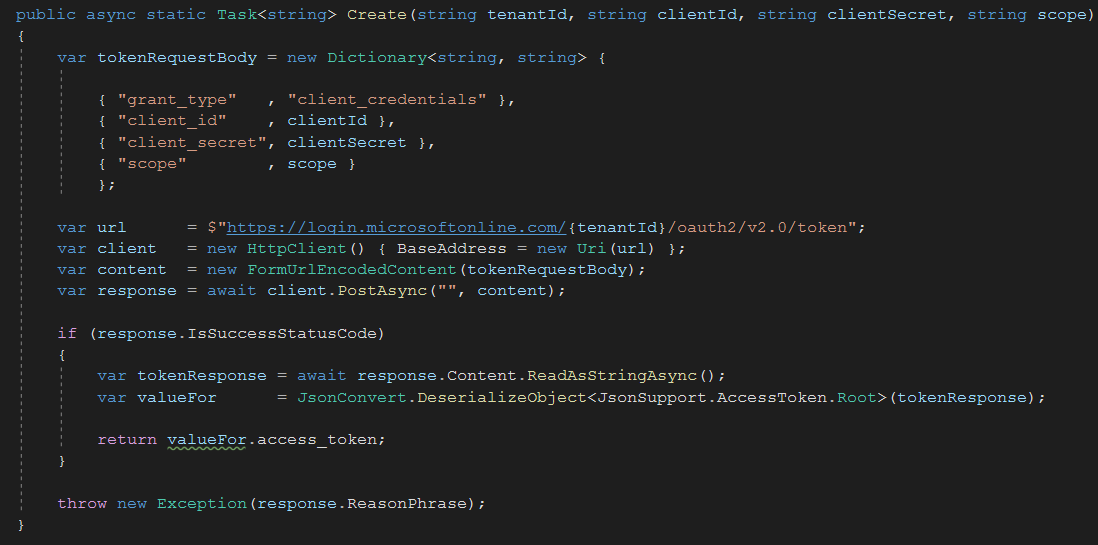

How to Create a Bearer Token

Intro: Here's the code I learned to write to generate an authorization token. Writing the Test: The following test was written to generate a bearer token: [<Test>] let ``get authorization token``() = let section = ConfigurationManager.GetSection("section.bearerToken") :?> NameValueCollection; let kvPairs = section.AllKeys.Select(fun k -> new KeyValuePair<string, string>(k, section[k])); let tenantId = kvPairs.Single(fun v -> …

F#: When HttpClient PostAsJsonAsync no longer works

Intro: I recently upgraded all of the NuGet packages inside my Visual Studio solution. The result was breaking changes at runtime. Specifically, HttpClient::PostAsJsonAsync was producing an empty JSON value. The following is an example of such code using HttpClient::PostAsJsonAsync: use client = httpClient baseAddress let encoded = Uri.EscapeUriString(resource) let! response = client.PostAsJsonAsync(encoded, payload) |> Async.AwaitTask Here are …


Pulumi: How can a Function App reference a connection string from a SQL Server Database declaration?

Using Pulumi, I recently learned how to obtain a connection string from a SQL Server database declaration. I needed this connection string so that I could register its value in an App Setting. I learned that Pulumi provides the ConnStringInfoArgs type to accommodate this need. Here's some example code: using System; using Pulumi; using Pulumi.AzureNative.Resources; …

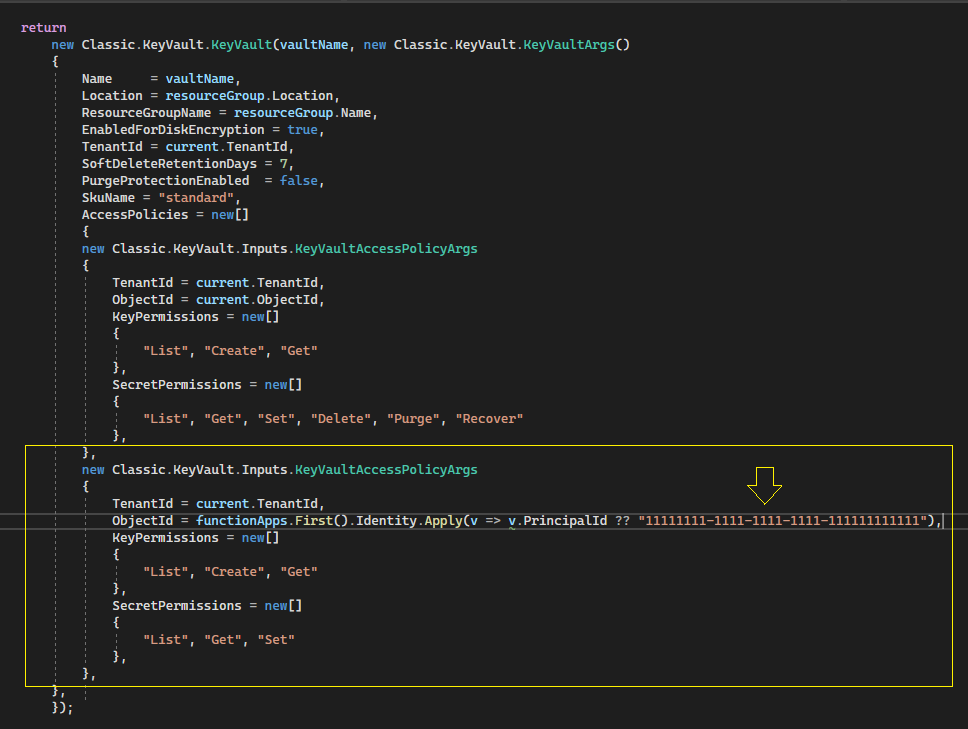

Pulumi: Function App, Key Vault, and Access Policy

My Brain Dump: How can a Function App access Azure Key Vault secrets? Known issue and workaround: I referenced the issue I was observing and used the recommended workaround, shown below. Function App: First, provide an identity value to the Function App declaration: Identity = new FunctionAppIdentityArgs { Type = "SystemAssigned" }, …


Pulumi Code Examples

My hobby project consists of building a mobile delivery platform, so I needed some Infrastructure as Code (IaC) to provision deployments. My goal was to have code structured as follows: Fortunately, I was able to learn the basics and write the proof of concept in a short amount of time. Here are some clients to …